Let’s play Ten for Ten! To commemorate the ten-year anniversary of this blog, which launched on June 26, 2014, here’s an appreciation for ten of my formative cinematic influences—an examination of why these movies resonated with me when I first saw them, and how they permanently informed my aesthetic tastes and creative sensibilities. This post is presented in three installments.

“Under the Influence, Part 1” informally ponders through personal example how an artist develops a singular style and voice all their own, and offers an analysis of Quentin Tarantino’s essay collection Cinema Speculation, the auteur’s critical look at the movies of the ’70s that inspired him.

In “Under the Influence, Part 2,” I spotlight five films from my ’80s childhood that shaped my artistic intuition when at its most malleable.

And in “Under the Influence, Part 3,” I round out the bill with five selections from my ’90s adolescence, the period during which many of the themes that preoccupy me crystalized.

It takes an unholy degree of time and stamina to write a book. Consequently, it’s advisable to have a really good reason to take a given project on—then see it through to the finish line. Before typing word one of a new manuscript, it behooves us to ask (and answer): Why is this project worth the herculean effort required to bring it into existence?

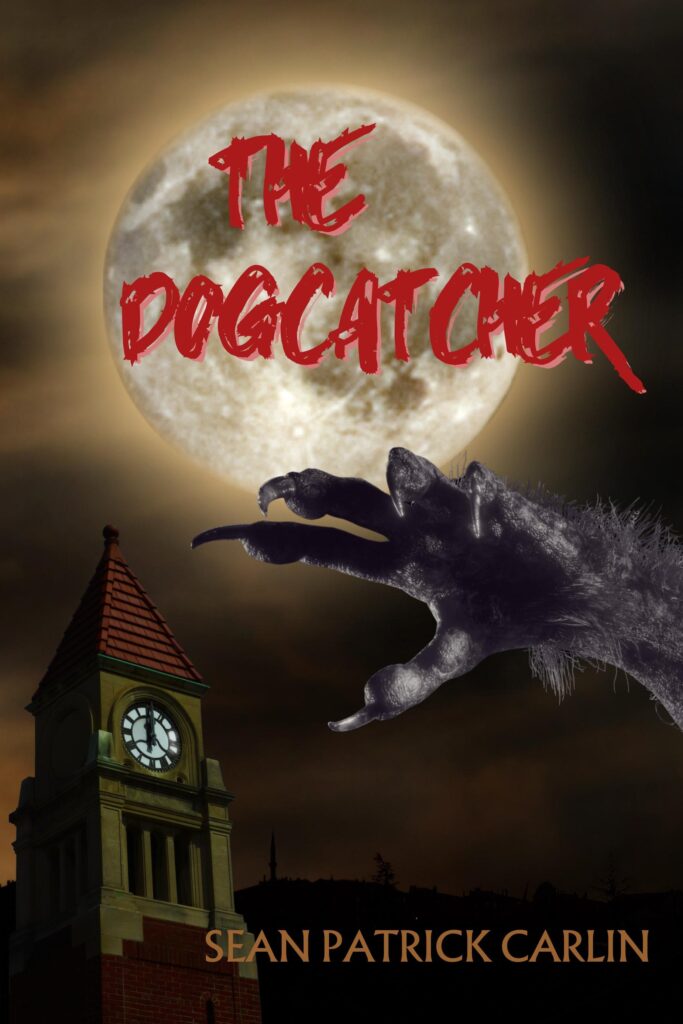

I wrote my debut novel The Dogcatcher (2023) for the most elemental of motives: I ached for the kind of bygone horror/comedies on which I’d come of age in the ’80s, an era that produced such motley and memorable movies as An American Werewolf in London (1981), The Evil Dead (1981), Gremlins (1984), Ghostbusters (1984), The Witches of Eastwick (1987), The Lost Boys (1987), The Monster Squad (1987), The ’Burbs (1989), and Tremors (1990). Where have those kinds of movies gone? I wondered.

Hollywood, to be fair, hadn’t stopped making horror/comedies; it had only long since stopped making them with any panache. I have spent many a Saturday night over the past decade in a binge-scrolling malaise, surfing numbly through hundreds of viewing options on Netflix or Prime or Hulu or whatever, when suddenly my inner adolescent’s interest is piqued—as though I were back at the old video store and had found a movie right up my alley.

I certainly sensed the stir of possibility in Vampires vs. the Bronx (2020), about a group of teenagers from my hometown battling undead gentrifiers. Night Teeth (2021), featuring bloodsuckers in Boyle Heights, seemed equally promising. And Werewolves Within (2021) is set in a snowbound Northeastern United States township already on edge over a proposed pipeline project when its residents find themselves under attack by a werewolf.

All of a sudden, I felt like that sixteen-year-old kid who saw the one-sheet for Buffy the Vampire Slayer (1992) while riding the subway to work—“She knows a sucker when she sees one,” teased the tagline, depicting a cheerleader from the neck down with a wooden stake in her fist—and knew he was in for a good time at the cinema.

No such luck. Vampires vs. the Bronx, in an act of creative criminality, pisses away a narratively and thematically fertile premise through flat, forgettable execution.

Night Teeth, meanwhile, answers the question: How about a movie set in the same stomping ground as Blade (1998)—inner-city L.A., clandestine vampiric council calling the shots—only without any of its selling-point stylistics or visual inventiveness?

And Werewolves Within establishes an intriguing environmental justice subplot the screenwriter had absolutely no interest in or, it turns out, intention of developing—the oil pipeline isn’t so much a red herring as a dead herring—opting instead for a half-assed, who-cares-less whodunit beholden to all the standard-issue genre tropes.

Faced with one cinematic disappointment after another, I concluded that the only way to sate my appetite for the kind of horror/comedy that spoke to me as a kid was to write my own.

On the subject of kids—specifically, stories about twelve-year-old boys—I haven’t seen one of those produced with any appreciable measure of emotional honesty or psychological nuance since Rob Reiner’s Stand by Me (1986), based on Stephen King’s 1982 novella The Body. That was nearly forty years ago!

Storytellers know how to write credible children (E.T. the Extra-Terrestrial, Home Alone, Room), and they know how to write teenagers (The Outsiders, Ferris Bueller’s Day Off, Clueless), but preadolescent boys are almost invariably reduced to archetypal brushstrokes (The Goonies, The Sandlot, Stranger Things). The preteen protagonists of such stories are seldom made to grapple with the singular emotional turbulence of having one foot in childhood—still watching cartoons and playing with action figures—and the other in adolescence—beginning to regard girls with special interest, coming to realize your parents are victims of generational trauma that’s already in the process of being passed unknowingly and inexorably down to you.

For all of popular culture’s millennia-long fixation on and aggrandizement of the heroic journey of (usually young) men, our commercial filmmakers and storytellers either can’t face or don’t know how to effectively dramatize the developmental fulcrum of male maturation. George Lucas’ experimental adventure series The Young Indiana Jones Chronicles (1992–1996) sheds light on Indy’s youth from ages eight through ten (where he’s portrayed by Corey Carrier) and then sixteen through twenty-one (Sean Patrick Flanery); the complicated messiness of pubescence, however, is entirely bypassed. Quite notably, those skipped years are the ones in which Indy’s mother died and his emotionally distant father retreated into his work—formative traumas that shaped, for better and worse, the adult hero played by Harrison Ford in the feature films.

Lucas’ elision seems odd to me—certainly a missed creative opportunity—given that twelve-going-on-thirteen is the period of many boys’ most memorable and meaningful adventures. King and Reiner never forgot that, and neither did I, hence the collection of magical-realism novellas I’m currently writing that explore different facets of that transitory experience: going from wide-eyed wonder to adolescent disillusionment as a result of life’s first major disappointment (Spex); being left to navigate puberty on your own in the wake of divorce (The Brigadier); struggling to understand when, how, and why you got socially sorted at school with the kids relegated to second-class citizenry (H.O.L.O.).

This single-volume trilogy, I should note, isn’t YA—these aren’t stories about preteens for preteens. Rather, they are intended, like The Body/Stand by Me before them, as a retrocognitive exercise for adults who’ve either forgotten or never knew the experience of being a twelve-year-old boy to touch base with that metamorphic liminality in all of its psychoemotional complexity. They’re very consciously stories about being twelve as viewed through middle-aged eyes.

As I’ll demonstrate in “Part 2” and “Part 3,” both that WIP and The Dogcatcher take inspiration—narratively, thematically, aesthetically, referentially—from the stories of my youth, the books and movies that first kindled my imagination and catalyzed my artistic passions.
